In previous posts, I wrote about the activities I completed in order to take Ghost for a test drive.

Now that my blog is up and running, there are a few more tasks I need to complete so that other people (and search engines) can find my site.

Sitemap

Sitemaps provide an easy way to tell search engines which content on your site is available for indexing. If you create a sitemap and submit it to search engines, potential readers will be much more likely to find your blog posts.
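For reference, a sitemap is just an XML file that lists URLs. The sketch below writes a minimal one by hand, assuming the sitemaps.org 0.9 schema. The function name `write_minimal_sitemap` and the example post URL are made up for illustration, and a real generator would usually add details such as last-modified dates.

```shell
#!/bin/sh
# Write a minimal sitemap (sitemaps.org 0.9 schema) to the file named in $1.
# Every remaining argument is treated as one absolute URL to list.
# URLs are written as-is, so they must not contain characters that
# need XML escaping, such as '&'.
write_minimal_sitemap() {
    out_file=$1
    shift
    {
        printf '%s\n' '<?xml version="1.0" encoding="UTF-8"?>'
        printf '%s\n' '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        for page_url in "$@"; do
            printf '  <url><loc>%s</loc></url>\n' "$page_url"
        done
        printf '%s\n' '</urlset>'
    } > "$out_file"
}
```

For example, `write_minimal_sitemap /tmp/sitemap.xml http://robferguson.org/ http://robferguson.org/example-post/` would produce a file with two `<url>` entries.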

You can use a sitemap generator to create and update your blog's sitemap:

- Google's Sitemap Generator

- George Liu's Ghost Sitemap Generator

- Marco Mornati's Ghost Sitemap Generator

I used George Liu's shell script to create my blog's sitemap. First, I made a copy of the script and then updated two variables:

url="robferguson.org"

webroot='/var/www/ghost'

While I was testing the script, I also enabled the debug variable:

debug='y' # disable debug mode with debug='n'

Then, I copied the updated shell script to my droplet, made the script executable, and created a soft link to a directory already in my path:

scp -P 19198 /Users/robferguson/Documents/ghostsitemap.sh rob@128.199.238.4:/home/homer/ghostsitemap.sh

sudo cp /home/homer/ghostsitemap.sh /usr/local/sbin

sudo chmod +x /usr/local/sbin/ghostsitemap.sh

sudo ln -s /usr/local/sbin/ghostsitemap.sh /usr/sbin/ghostsitemap.sh

To create a sitemap, enter the following command:

sudo ghostsitemap.sh

Now, check the directory where you installed Ghost (webroot='/var/www/ghost') for your new sitemap.xml.
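A quick way to sanity-check the result is to count the entries in the generated file. This is only a sketch: the helper name `count_sitemap_urls` is my own, and it assumes the generator writes standard `<loc>` elements.

```shell
#!/bin/sh
# Print the number of <loc> entries in the sitemap file given as $1.
# Returns non-zero if the file is missing or empty.
count_sitemap_urls() {
    sitemap_file=$1
    if [ ! -s "$sitemap_file" ]; then
        echo "sitemap not found or empty: $sitemap_file" >&2
        return 1
    fi
    # grep -o prints each match on its own line, so wc -l counts
    # matches even when several entries share one line.
    grep -o '<loc>' "$sitemap_file" | wc -l | tr -d ' '
}
```

Running `count_sitemap_urls /var/www/ghost/sitemap.xml` should print a number that roughly matches the number of pages and posts on the blog.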

We also need to create a robots.txt file. I used nano:

cd /var/www/ghost

sudo nano robots.txt

Then update it as follows:

User-agent: *

Sitemap: http://robferguson.org/sitemap.xml
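If you would rather not use an editor, the same file can be written from a script. The following is a minimal sketch with a hypothetical helper, `write_robots_txt`, that takes the target directory and the sitemap URL as arguments. It needs write permission on the directory, so for /var/www/ghost it would have to run as a user that can write there.

```shell
#!/bin/sh
# Write a robots.txt that addresses all crawlers and advertises the sitemap.
#   $1 - directory to write into (the Ghost webroot in this post)
#   $2 - absolute URL of the sitemap
write_robots_txt() {
    target_dir=$1
    sitemap_url=$2
    # Unquoted heredoc delimiter so that $sitemap_url is expanded.
    cat > "$target_dir/robots.txt" <<EOF
User-agent: *
Sitemap: $sitemap_url
EOF
}
```

For example: `write_robots_txt /var/www/ghost http://robferguson.org/sitemap.xml`.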

And finally, we need to add the following directives to our Nginx configuration:

location ~ ^/(robots.txt) {

root /var/www/ghost;

}

location ~ ^/(sitemap.xml) {

root /var/www/ghost;

}

Here's my complete Nginx configuration file:

server {

listen 80;

server_name robferguson.org;

access_log /var/log/nginx/robferguson_org.log;

location / {

proxy_pass http://127.0.0.1:2368;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

location /ghost {

return 301 https://$host$request_uri;

}

location ~ ^/(robots.txt) {

root /var/www/ghost;

}

location ~ ^/(sitemap.xml) {

root /var/www/ghost;

}

}

server {

listen 443 ssl;

server_name robferguson.org;

access_log /var/log/nginx/robferguson_org.log;

ssl_certificate /etc/nginx/ssl/robferguson_org.crt;

ssl_certificate_key /etc/nginx/ssl/robferguson_org.key;

ssl_session_timeout 5m;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers HIGH:!aNULL:!MD5;

ssl_prefer_server_ciphers on;

location /ghost {

proxy_pass http://127.0.0.1:2368;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

location / {

return 301 http://$host$request_uri;

}

}

Don't forget to restart Nginx:

sudo service nginx restart
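After the restart, it is worth confirming that both files are actually being served. The sketch below assumes curl is installed on the machine you test from. The helper names `status_from_headers` and `http_status` are my own.

```shell
#!/bin/sh
# Read HTTP response headers on stdin and print the status code from
# the first line, e.g. "200" for "HTTP/1.1 200 OK".
status_from_headers() {
    awk 'NR == 1 { print $2 }'
}

# Fetch only the headers for the URL in $1 and print its status code.
# -s silences progress output, -I sends a HEAD request.
http_status() {
    curl -sI "$1" | status_from_headers
}
```

Both `http_status http://robferguson.org/robots.txt` and `http_status http://robferguson.org/sitemap.xml` should print 200 if the location blocks are working.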

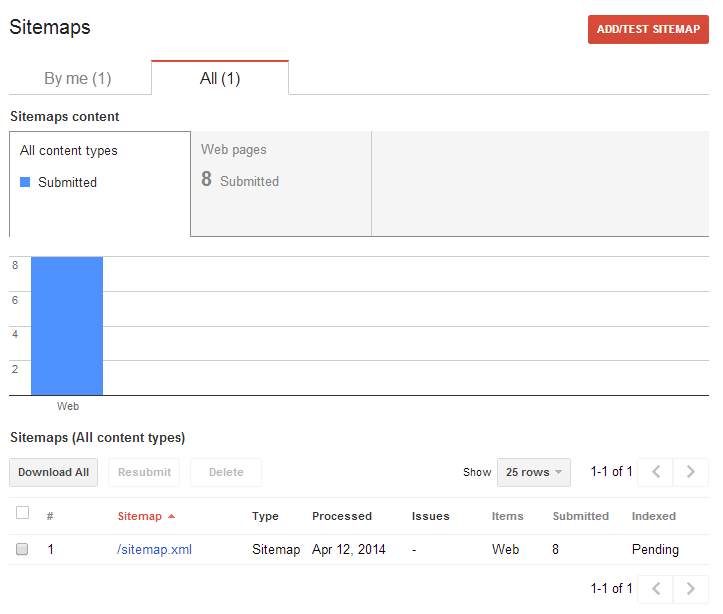

Now, you're ready to submit your sitemap to Google Webmaster Tools.

Scheduled Sitemap Updates

To keep the sitemap up to date, we can edit root's crontab:

sudo crontab -e

Then schedule our Ghost sitemap generator to run every night at midnight:

0 0 * * * /usr/sbin/ghostsitemap

It will update our sitemap and resubmit it to Google Webmaster Tools.
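If the five schedule fields in the crontab entry look cryptic, the following sketch splits an entry into labelled parts. The function `describe_cron_entry` is purely illustrative and only handles the plain five-field format used in this post.

```shell
#!/bin/sh
# Split one crontab entry into its five schedule fields and the command,
# then print each with a label.
describe_cron_entry() {
    # read does not perform pathname expansion, so "*" fields stay literal.
    read -r minute hour day_of_month month day_of_week command <<EOF
$1
EOF
    printf 'minute=%s\n' "$minute"
    printf 'hour=%s\n' "$hour"
    printf 'day_of_month=%s\n' "$day_of_month"
    printf 'month=%s\n' "$month"
    printf 'day_of_week=%s\n' "$day_of_week"
    printf 'command=%s\n' "$command"
}
```

Calling `describe_cron_entry '0 0 * * * /usr/sbin/ghostsitemap'` shows minute 0 and hour 0 (midnight), with the remaining three fields left as wildcards, which means every day.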

Note: Make sure you disable the script's debug variable (debug='n'). Also remember that Ubuntu, like Debian, won't run scripts from the /etc/cron.* directories if their names include an extension, so create a link without the .sh extension:

sudo ln -s /usr/local/sbin/ghostsitemap.sh /usr/sbin/ghostsitemap
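The naming rule comes from run-parts, which by default only runs files whose names consist of ASCII letters, digits, underscores and hyphens. As a rough sketch of that rule (the function name `is_run_parts_name` is hypothetical, and it ignores the extra options run-parts supports):

```shell
#!/bin/sh
# Return 0 if the file name in $1 matches the default run-parts rule:
# only ASCII letters, digits, underscores and hyphens. Return 1 otherwise.
is_run_parts_name() {
    case $1 in
        # Reject empty names and names containing any other character.
        ''|*[!A-Za-z0-9_-]*) return 1 ;;
        *) return 0 ;;
    esac
}
```

With this check, `is_run_parts_name ghostsitemap` succeeds while `is_run_parts_name ghostsitemap.sh` fails because of the dot.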

Next Steps

You might also like to take a look at Google Tag Manager.